After rendering lights with forward rendering, I wanted to start implementing deferred rendering. So for week 4, I took a shot at the first pass of deferred rendering.

Before going into deferred rendering, let me point out the disadvantages of forward rendering:

- Every fragment of every rendered object has to iterate over each light source, which is a lot!

- Forward rendering also tends to waste a lot of fragment shader runs when multiple objects cover the same screen pixels, because most fragment shader outputs are overwritten (the lighting calculations done for them are wasted).
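To put rough numbers on those two points, here is a minimal back-of-the-envelope sketch in C++. It is not code from my renderer: `FrameStats` and both functions are hypothetical names, and the model assumes every screen pixel is covered, every rasterized fragment is shaded (no early depth test helps), and every fragment loops over every light.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical per-frame statistics; the names are made up for this estimate.
struct FrameStats {
    std::uint64_t screenPixels;       // window width * height
    std::uint64_t fragmentsPerPixel;  // average overdraw: fragments shaded per pixel (>= 1)
    std::uint64_t lightCount;         // lights looped over in the fragment shader
};

// Total light evaluations in a forward renderer under the assumptions above:
// every shaded fragment loops over every light.
std::uint64_t forwardLightEvaluations(const FrameStats& s) {
    return s.screenPixels * s.fragmentsPerPixel * s.lightCount;
}

// Evaluations whose result ends up overwritten by another fragment,
// i.e. everything except the one fragment per pixel that stays visible.
std::uint64_t wastedLightEvaluations(const FrameStats& s) {
    assert(s.fragmentsPerPixel >= 1);
    return s.screenPixels * (s.fragmentsPerPixel - 1) * s.lightCount;
}
```

For an 800x600 window with 4 fragments per pixel on average and 32 lights, this model gives 61,440,000 light evaluations per frame, of which 46,080,000 are wasted. A real GPU will usually do better than this worst case, so treat the numbers as an illustration only.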

Deferred rendering (or deferred shading) tries to overcome these issues by drastically changing the way objects are rendered. It opens up several options for optimizing scenes with many lights, making it possible to render hundreds or even thousands of lights at an acceptable frame rate. Deferred shading is based on the idea that we defer, or postpone, most of the heavy rendering work, like lighting, to a later stage. It consists of two passes: the geometry pass and the lighting pass.

1. Geometry pass: We render the scene once and retrieve all kinds of geometrical information from the objects, which we store in a collection of textures called the G-buffer. (G-buffer is the collective term for all the textures used to store lighting-relevant data for the final lighting pass.) Geometrical information includes position vectors, color vectors, normal vectors and/or specular values. So I wrote a vertex shader and a fragment shader to do exactly that. My vertex shader looked almost the same as before, but my fragment shader changed completely. Instead of calculating lighting and outputting the final color, the fragment shader stored positions, normals, albedo (diffuse color) and specular values in the G-buffer's textures.

To store the geometrical information in the G-buffer from the fragment shader, I first had to create a framebuffer, then generate the textures and attach each one to the framebuffer at a color attachment number. I used the same attachment number as the layout location specifier in the fragment shader, something like:

layout (location = 0) out vec3 gPosition;

layout (location = 1) out vec3 gNormal;

All of the G-buffer textures had exactly the same dimensions as the window.
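To show what the geometry pass leaves behind, here is a small CPU-side stand-in for the G-buffer in C++. This is only a sketch of the idea, not my actual OpenGL code: `GBuffer` and `writeFragment` are hypothetical names, plain arrays play the role of the textures, and the depth test that the GPU normally performs for us is folded into the function.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

struct Vec3 { float x, y, z; };

// CPU stand-in for the G-buffer: one entry per window pixel in each "texture".
struct GBuffer {
    int width, height;
    std::vector<Vec3> position;   // plays the role of color attachment 0 (gPosition)
    std::vector<Vec3> normal;     // plays the role of color attachment 1 (gNormal)
    std::vector<Vec3> albedo;     // diffuse color
    std::vector<float> specular;  // specular intensity
    std::vector<float> depth;     // depth buffer, smaller means nearer

    GBuffer(int w, int h)
        : width(w), height(h),
          position(static_cast<std::size_t>(w) * h, Vec3{0.0f, 0.0f, 0.0f}),
          normal(static_cast<std::size_t>(w) * h, Vec3{0.0f, 0.0f, 0.0f}),
          albedo(static_cast<std::size_t>(w) * h, Vec3{0.0f, 0.0f, 0.0f}),
          specular(static_cast<std::size_t>(w) * h, 0.0f),
          depth(static_cast<std::size_t>(w) * h,
                std::numeric_limits<float>::infinity()) {}
};

// Geometry-pass "fragment shader": stores surface data and does no lighting.
// Returns true if the fragment passed the depth test and was written.
bool writeFragment(GBuffer& g, int x, int y, float depth,
                   Vec3 pos, Vec3 n, Vec3 albedo, float spec) {
    assert(x >= 0 && x < g.width && y >= 0 && y < g.height);
    const std::size_t i = static_cast<std::size_t>(y) * g.width + x;
    if (depth >= g.depth[i]) {
        return false;  // hidden behind something already stored
    }
    g.depth[i] = depth;
    g.position[i] = pos;
    g.normal[i] = n;
    g.albedo[i] = albedo;
    g.specular[i] = spec;
    return true;
}
```

After all objects have been drawn this way, each pixel holds the data of the nearest surface only, and no lighting has been computed for any fragment that ended up hidden.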

2. Lighting pass: We render a screen-filling quad and calculate the scene’s lighting for each fragment using the geometrical information stored in the G-buffer, iterating over the G-buffer pixel by pixel. I wrote another vertex shader and fragment shader for the lighting pass. The vertex shader was simple: it just took positions and texture coordinates as its input. The positions were the corners of a quad covering the entire screen in normalized device coordinates, with matching texture coordinates. The vertex shader set the position of each vertex using gl_Position as before and passed the texture coordinates to the fragment shader. The fragment shader in the lighting pass is where all the heavy calculations were performed (I implemented ambient and specular lighting for this week). The main difference between the fragment shaders in forward and deferred rendering was where the data came from. In forward rendering, I sampled the diffuse and specular textures bound to the shader's uniforms for the current object. In deferred rendering, those values were sampled from the G-buffer textures written during the geometry pass. Along with them, I also fetched the fragment’s position and normal vectors from the G-buffer, rather than receiving them from the vertex shader as in forward rendering.
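The per-pixel work of the lighting pass can be mirrored on the CPU as a rough C++ sketch. Again, this is not my shader: `GBufferSample`, `PointLight` and `shadeSample` are hypothetical names, the specular term is assumed to be plain Phong, and the ambient strength and shininess defaults are made-up values.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 { float x, y, z; };

inline Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }
inline float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
// Assumes v is not the zero vector.
inline Vec3 normalize(Vec3 v) { return v * (1.0f / std::sqrt(dot(v, v))); }

// What one pixel of the G-buffer holds after the geometry pass.
struct GBufferSample {
    Vec3 position;   // fragment position
    Vec3 normal;     // unit-length surface normal
    Vec3 albedo;     // diffuse color
    float specular;  // specular intensity
};

struct PointLight {
    Vec3 position;
    Vec3 color;
};

// Lighting for one screen pixel: ambient plus Phong specular summed over all
// lights. Everything about the surface comes from the G-buffer sample.
Vec3 shadeSample(const GBufferSample& g, const std::vector<PointLight>& lights,
                 Vec3 viewPos, float ambientStrength = 0.1f,
                 float shininess = 16.0f) {
    Vec3 result = g.albedo * ambientStrength;
    const Vec3 viewDir = normalize(viewPos - g.position);
    for (const PointLight& light : lights) {
        const Vec3 lightDir = normalize(light.position - g.position);
        // Same as GLSL reflect(-lightDir, normal): -L + 2 * dot(N, L) * N
        const Vec3 reflectDir =
            g.normal * (2.0f * dot(g.normal, lightDir)) - lightDir;
        const float spec =
            std::pow(std::max(dot(viewDir, reflectDir), 0.0f), shininess);
        result = result + light.color * (spec * g.specular);
    }
    return result;
}
```

The light loop runs once per screen pixel, on data already stored in the G-buffer, so hidden fragments never reach it.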

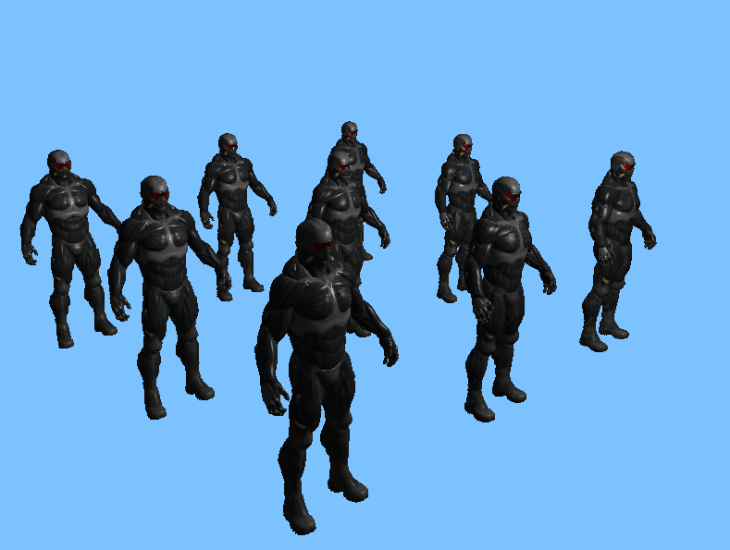

Here is how the output looked after implementing deferred rendering.

The output looks similar to forward rendering. The point is that with deferred rendering we can add a large number of lights without a significant drop in the frame rate, which would not have been possible with forward rendering.